The Context Gap in Modern AI Systems

LLMs are frozen in both time and space.

Even the most advanced models don’t know:

- Where the user is located

- What timezone they’re in

- Which laws, regulations, or logistics apply locally

Unless that information is explicitly provided, the model can only infer — and inference without constraints is exactly where hallucinations emerge.

This limitation becomes especially risky in:

- Customer support chatbots

- Legal or compliance assistants

- E-commerce recommendation engines

- Autonomous AI agents interacting with real users

Context-aware RAG addresses this by enriching the pipeline before retrieval or generation happens. Instead of asking the model to guess, we provide it with ground truth — such as city, country, and timezone — so it can reason accurately.

Geolocation is one of the most reliable and lowest-friction ways to achieve this grounding.

Why Geolocation Reduces Hallucinations

Grounding as a Guardrail 🧱

In AI system design, grounding means anchoring model outputs to verifiable, external reality.

By injecting structured location data — city, region, country, timezone — into the system prompt or the retrieval layer, we constrain the model’s reasoning space. This acts as a guardrail against implausible or irrelevant answers.

For example:

- Business hours depend on timezone

- Legal advice depends on jurisdiction

- Shipping and tax rules vary by country and state

Without location context, hallucinations aren’t just possible — they’re statistically likely.
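As a small illustration of the first bullet, the sketch below checks whether a business is open in the user's local time. The function name `is_open` and the opening hours are hypothetical, and fixed UTC offsets stand in for real timezone objects (in practice you would likely pass `zoneinfo.ZoneInfo("Europe/Berlin")` built from the timezone name).

```python
from datetime import datetime, time, timedelta, timezone, tzinfo
from typing import Optional


def is_open(user_tz: tzinfo, opens: time, closes: time,
            now_utc: Optional[datetime] = None) -> bool:
    """Return True if the user's local clock time falls within [opens, closes)."""
    now_utc = now_utc or datetime.now(timezone.utc)
    local_now = now_utc.astimezone(user_tz)
    return opens <= local_now.time() < closes


# Assumption: fixed offsets stand in for Europe/Berlin and America/New_York
# in January, which keeps the sketch free of timezone-database dependencies.
berlin_winter = timezone(timedelta(hours=1))
new_york_winter = timezone(timedelta(hours=-5))

moment = datetime(2024, 1, 15, 8, 30, tzinfo=timezone.utc)  # 08:30 UTC
berlin_open = is_open(berlin_winter, time(9), time(17), moment)      # 09:30 local
new_york_open = is_open(new_york_winter, time(9), time(17), moment)  # 03:30 local
print(berlin_open, new_york_open)  # True False
```

The same instant yields opposite answers, which is exactly the kind of detail a model cannot get right without knowing the user's timezone.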

Disambiguation at Scale

Geolocation also solves ambiguity problems that pure semantic search can’t handle:

- Paris, France vs. Paris, Texas

- Employment law in California vs. New York

- VAT rules in the EU vs. U.S. sales tax

Advanced RAG pipelines discussed by search and observability platforms increasingly emphasize metadata-driven retrieval. AbstractAPI provides this metadata in milliseconds, making it feasible to inject before LLM inference without hurting Time to First Token (TTFT).
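A minimal sketch of location-based disambiguation, assuming a tiny in-memory list of places. The `PLACES` data and the `resolve_place` helper are hypothetical; a real system would query a places database or the retriever's metadata instead.

```python
from typing import Dict, List

# Hypothetical gazetteer entries used only for illustration.
PLACES: List[Dict[str, str]] = [
    {"name": "Paris", "region": "Île-de-France", "country_code": "FR"},
    {"name": "Paris", "region": "Texas", "country_code": "US"},
]


def resolve_place(name: str, user_country_code: str) -> Dict[str, str]:
    """Prefer the candidate in the user's country; otherwise return the first match."""
    candidates = [p for p in PLACES if p["name"].lower() == name.lower()]
    if not candidates:
        raise LookupError(f"unknown place: {name}")
    for place in candidates:
        if place["country_code"] == user_country_code:
            return place
    return candidates[0]


print(resolve_place("Paris", "US")["region"])  # Texas
print(resolve_place("Paris", "FR")["region"])  # Île-de-France
```

The country code acts as a tie-breaker that semantic similarity alone cannot provide, since both entries match the query text equally well.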

Strategy 1: Prompt Injection (The Easy Fix)

Best for: General chatbots, customer support assistants, internal productivity tools.

How It Works

- Detect the user’s IP address

- Resolve it to an approximate geographic location (city, region, country, timezone)

- Inject that information into the system prompt

This approach doesn’t require changes to your vector database or retriever logic. You simply give the model better instructions.
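The first step, detecting the user's IP address, is often less trivial than it sounds when the application sits behind a proxy or load balancer. The sketch below is one possible approach, assuming the proxy sets an `X-Forwarded-For` header and that you trust that proxy; the helper name `client_ip` is hypothetical, and web frameworks usually offer their own mechanisms for this.

```python
import ipaddress
from typing import Mapping, Optional


def client_ip(headers: Mapping[str, str], remote_addr: str) -> Optional[str]:
    """Best-effort client IP: first public X-Forwarded-For entry, else remote_addr."""
    forwarded = headers.get("X-Forwarded-For", "")
    candidates = [part.strip() for part in forwarded.split(",") if part.strip()]
    candidates.append(remote_addr)
    for candidate in candidates:
        try:
            ip = ipaddress.ip_address(candidate)
        except ValueError:
            continue  # skip malformed entries
        if ip.is_global:
            # Private, loopback, and reserved addresses cannot be geolocated.
            return str(ip)
    return None


print(client_ip({"X-Forwarded-For": "8.8.8.8, 10.0.0.1"}, "10.0.0.2"))  # 8.8.8.8
print(client_ip({}, "127.0.0.1"))  # None
```

Returning `None` for non-public addresses lets the caller skip the geolocation lookup entirely, for example during local development.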

Example System Prompt

- You are a helpful assistant. The user is currently located in Berlin, Germany (Timezone: CET). Answer all questions using information relevant to this region.

Even this minimal context dramatically improves answers related to:

- Opening hours

- Local currencies

- Regional regulations

- Product or service availability
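The example prompt above can be generated from a location dictionary. This is a minimal sketch: the function name `build_system_prompt` and the fallback wording for an unknown location are assumptions, not part of any library.

```python
from typing import Mapping, Optional


def build_system_prompt(location: Mapping[str, Optional[str]]) -> str:
    """Compose a system prompt from whichever location fields are present."""
    base = "You are a helpful assistant."
    place = ", ".join(
        value for value in (location.get("city"), location.get("country")) if value
    )
    if not place:
        # Assumed fallback: tell the model not to guess a region.
        return base + " The user's location is unknown; ask before assuming regional details."
    prompt = f"{base} The user is currently located in {place}"
    if location.get("timezone"):
        prompt += f" (Timezone: {location['timezone']})"
    return prompt + ". Answer all questions using information relevant to this region."


print(build_system_prompt(
    {"city": "Berlin", "country": "Germany", "timezone": "Europe/Berlin"}
))
```

Handling the missing-location case explicitly matters because IP lookups can fail or return partial data, and a prompt with empty placeholders may mislead the model.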

Fetching Location Data with AbstractAPI (Python)

The Abstract IP Geolocation API is purpose-built for fast, real-time enrichment and is commonly used as a pre-LLM context layer.

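import requests

def get_user_location(ip_address: str) -> dict:
    # Look up location data for the given IP address.
    response = requests.get(
        "https://ipgeolocation.abstractapi.com/v1/",
        params={
            "api_key": "YOUR_ABSTRACT_API_KEY",
            "ip_address": ip_address
        },
        timeout=1  # seconds; keeps a slow lookup from stalling the conversation
    )
    # Raise an exception on 4xx/5xx responses instead of returning error payloads.
    response.raise_for_status()
    return response.json()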

This request typically completes in under 100 ms globally, which is critical for conversational systems. As discussed in Abstract’s guide on API rate limits and performance, latency directly impacts perceived responsiveness and user trust.
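Because the lookup sits on the critical path, it can help to degrade gracefully when it fails rather than block the response. The sketch below wraps any lookup function; the name `lookup_or_none` is hypothetical, and the lookup is passed in as a parameter so the example runs without network access.

```python
from typing import Callable, Optional


def lookup_or_none(ip_address: str, fetch: Callable[[str], dict]) -> Optional[dict]:
    """Call fetch(ip_address); return None instead of raising on network errors."""
    try:
        return fetch(ip_address)
    except OSError:
        # requests.RequestException derives from IOError (an alias of OSError),
        # so timeouts, connection errors, and HTTP errors raised by
        # raise_for_status() all land here.
        return None


def fake_timeout(ip: str) -> dict:
    """Stand-in for a lookup that exceeds its timeout."""
    raise TimeoutError("simulated slow lookup")


print(lookup_or_none("8.8.8.8", fake_timeout))                    # None
print(lookup_or_none("8.8.8.8", lambda ip: {"city": "Berlin"}))   # {'city': 'Berlin'}
```

In a real pipeline you would pass `get_user_location` as `fetch` and fall back to a location-neutral prompt when the result is `None`.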

👉 Related: Abstract IP Geolocation API

Strategy 2: Vector Database Metadata Filtering

Best for: Legal tech, real estate platforms, compliance systems, AI-powered search engines.

The Core Idea

Instead of letting the LLM see all documents, you filter them before retrieval using location metadata.

This ensures the model never even processes irrelevant content.

Hybrid Search in RAG Pipelines

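Modern RAG systems increasingly pair vector search with metadata filters, a combination often loosely called hybrid search (strictly, that term refers to mixing keyword and vector retrieval):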

- Semantic similarity via vector embeddings

- Structured filters via metadata

Instead of querying globally for “shipping laws”, you query:

- “shipping laws”

- AND country = "US"

- AND state = "CA"

This significantly improves precision and dramatically reduces hallucinated legal or regulatory advice.
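To make the filter-then-rank idea concrete, here is a dependency-free sketch using an in-memory document list. The documents, the two-dimensional vectors, and the `filtered_search` helper are all made up for illustration; a real vector database applies the same logic with its own filter syntax and index.

```python
import math
from typing import Any, Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def filtered_search(query_vec: Sequence[float], docs: List[Dict[str, Any]],
                    metadata_filter: Dict[str, Any], k: int = 3) -> List[Dict[str, Any]]:
    """Keep docs matching every filter key, then rank them by cosine similarity."""
    matching = [
        d for d in docs
        if all(d["metadata"].get(key) == value for key, value in metadata_filter.items())
    ]
    return sorted(matching, key=lambda d: cosine(query_vec, d["vector"]), reverse=True)[:k]


# Hypothetical corpus: toy vectors standing in for "shipping laws" embeddings.
DOCS = [
    {"id": "ca-shipping", "vector": [0.9, 0.1], "metadata": {"country": "US", "state": "CA"}},
    {"id": "ny-shipping", "vector": [0.95, 0.05], "metadata": {"country": "US", "state": "NY"}},
    {"id": "de-shipping", "vector": [1.0, 0.0], "metadata": {"country": "DE", "state": None}},
]

results = filtered_search([1.0, 0.0], DOCS, {"country": "US", "state": "CA"})
print([d["id"] for d in results])  # ['ca-shipping']
```

Note that the German document is the closest semantic match to the query vector, yet it never reaches the model because the filter removes it first.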

Conceptual LangChain Example

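# Conceptual example: `vectorstore` is an existing LangChain vector store.
# The filter is set when the retriever is created, not passed at query time;
# the exact filter syntax depends on the vector store backend.
retriever = vectorstore.as_retriever(
    search_kwargs={
        "filter": {
            "country_code": user_location["country_code"]
        }
    }
)
docs = retriever.invoke(query)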

LangChain’s metadata filtering and self-querying retrievers make this pattern easy to implement with vector stores like Pinecone, ChromaDB, or pgvector.

Implementation Tutorial: Python + AbstractAPI 🧑‍💻

Let’s walk through a simplified end-to-end flow.

Step 1: Install Dependencies

pip install requests langchain openai

Step 2: Fetch User Context

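def get_user_context(ip_address: str) -> dict:
    # Reduce the full API response to the fields the RAG pipeline needs.
    data = get_user_location(ip_address)
    # Guard against a missing or null "timezone" object in the response.
    timezone_info = data.get("timezone") or {}
    return {
        "city": data.get("city"),
        "country": data.get("country"),
        "country_code": data.get("country_code"),
        "timezone": timezone_info.get("name")
    }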

Latency is critical here.

The IP lookup happens before LLM inference, so it must be fast. AbstractAPI is optimized for low-latency global responses, making it suitable for real-time AI agents.
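One way to keep the lookup off the critical path for repeat visitors is a short-lived cache keyed by IP address. The sketch below is an assumption about how you might do this, not something the API requires; the class name `TTLLocationCache` and the 300-second default are hypothetical, and the lookup function is injected so the example runs offline.

```python
import time
from typing import Callable, Dict, Tuple


class TTLLocationCache:
    """Cache lookup results per IP address for a limited time."""

    def __init__(self, fetch: Callable[[str], dict], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, dict]] = {}

    def get(self, ip_address: str) -> dict:
        now = self._clock()
        hit = self._entries.get(ip_address)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # still fresh: skip the network call
        value = self._fetch(ip_address)
        self._entries[ip_address] = (now, value)
        return value


calls = []

def counting_fetch(ip: str) -> dict:
    """Stand-in lookup that records how often it is called."""
    calls.append(ip)
    return {"city": "Berlin"}

cache = TTLLocationCache(counting_fetch)
cache.get("8.8.8.8")
cache.get("8.8.8.8")
print(len(calls))  # 1
```

The TTL matters because the location behind an IP address can change (mobile networks, VPNs), so cached entries should expire rather than live forever.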

Step 3: Inject Context into the RAG Chain

At this point, you can:

- Add location data to the system prompt

- Use it as metadata for vector retrieval

- Or combine both approaches

This flexibility is what makes geolocation such a powerful grounding layer in RAG architectures.
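Combining both approaches might look like the sketch below, which derives a prompt preamble and a retrieval filter from the same context dictionary. The function name `build_rag_inputs`, the preamble format, and the choice to filter only on `country_code` are assumptions made for illustration.

```python
from typing import Any, Dict, Mapping, Optional


def build_rag_inputs(context: Mapping[str, Optional[str]]) -> Dict[str, Any]:
    """Derive a prompt preamble and retriever search_kwargs from one context dict."""
    # Drop fields the lookup could not resolve.
    known = {key: value for key, value in context.items() if value}

    if known:
        details = ", ".join(f"{key}={value}" for key, value in sorted(known.items()))
        preamble = f"User context: {details}"
    else:
        preamble = "User context: unknown"

    # Only filter retrieval when the country is actually known.
    search_kwargs: Dict[str, Any] = {}
    if "country_code" in known:
        search_kwargs["filter"] = {"country_code": known["country_code"]}

    return {"preamble": preamble, "search_kwargs": search_kwargs}


inputs = build_rag_inputs(
    {"city": "Berlin", "country": "Germany", "country_code": "DE", "timezone": None}
)
print(inputs["preamble"])       # User context: city=Berlin, country=Germany, country_code=DE
print(inputs["search_kwargs"])  # {'filter': {'country_code': 'DE'}}
```

Deriving both outputs from a single dictionary keeps the prompt and the retrieval filter consistent, so the model is never told one location while reading documents filtered for another.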

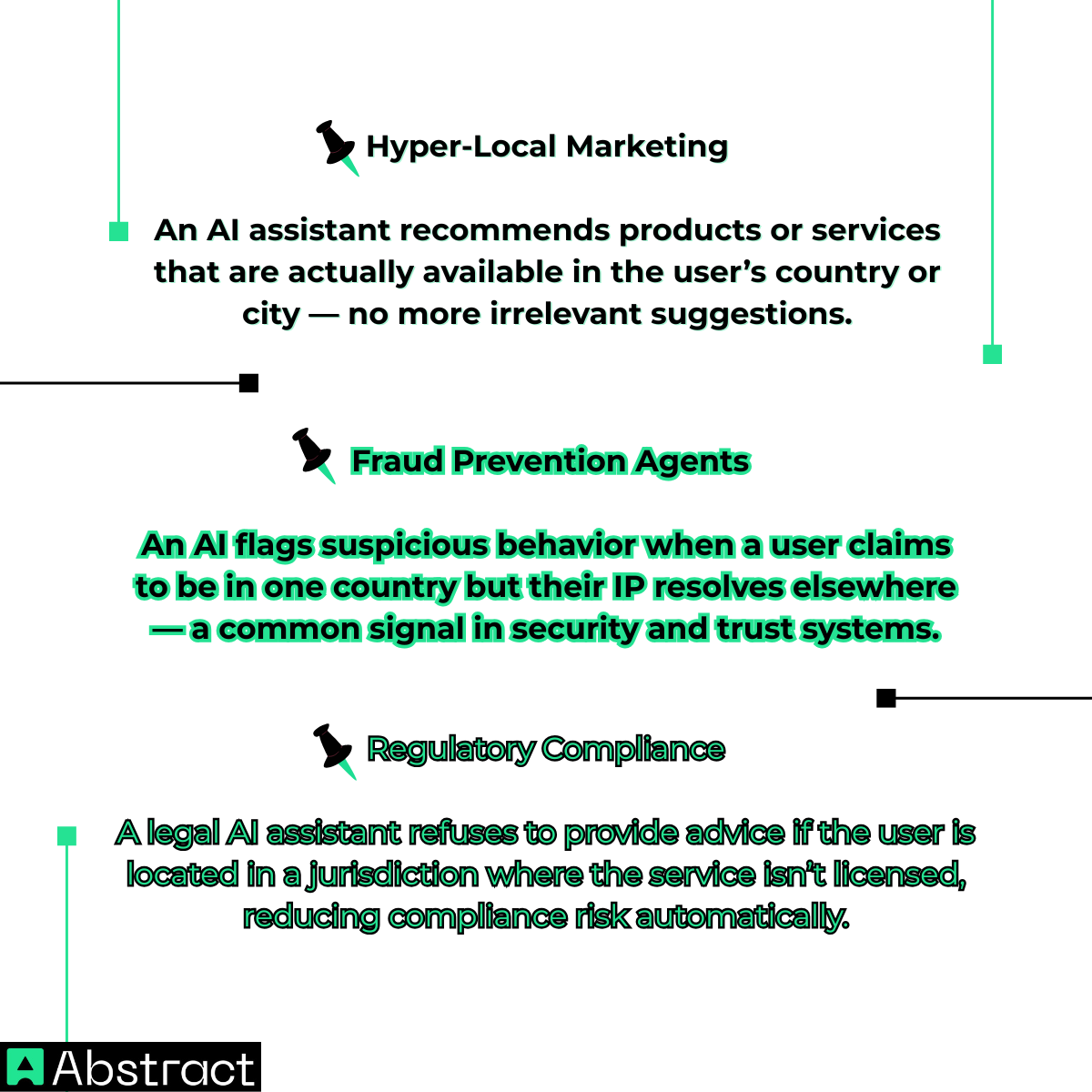

Real-World Use Cases

Conclusion: Grounding Turns RAG into Intelligence

RAG without context is essentially a smarter search engine.

RAG with context becomes an intelligent, reliable agent.

By enriching your pipeline with real-time geolocation data, you:

- Reduce hallucinations

- Improve relevance

- Enforce regulatory boundaries

- Increase user trust

The Abstract IP Geolocation API provides a fast, developer-friendly way to add this grounding layer — without sacrificing latency or architectural simplicity.

👉 Don’t let your AI guess. Ground it in reality.

Get started with a free API key from Abstract and build context-aware AI systems that understand not just what users ask — but where they are.