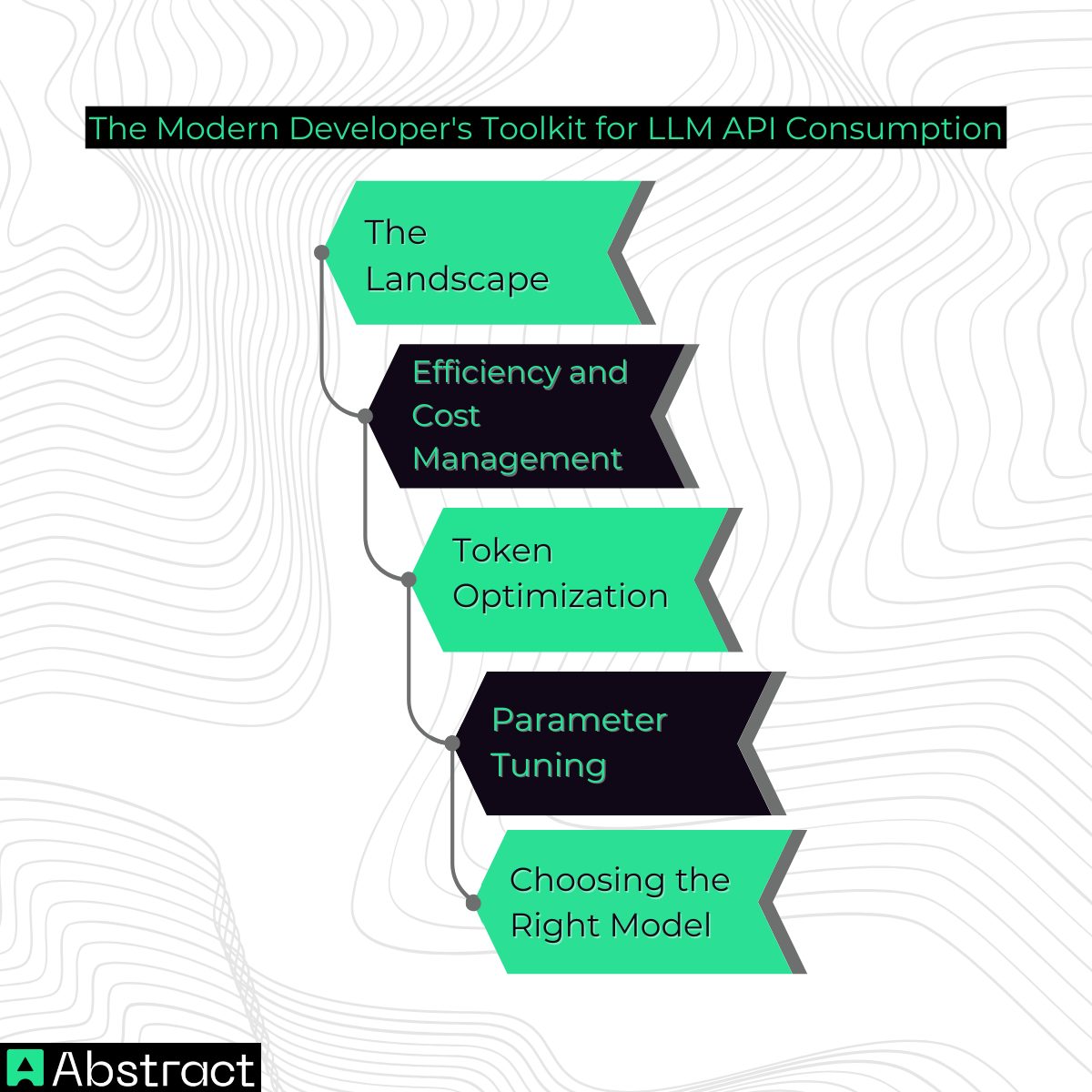

The Modern Developer's Toolkit for LLM API Consumption ⚙️

The Landscape

LLM APIs now come in multiple flavors:

- Proprietary models: OpenAI’s GPT series, Anthropic’s Claude, or Google’s Gemini, offering high performance, stability, and strong developer support.

- Open-source alternatives: Platforms like OpenRouter, Groq, or self-hosted instances using tools like Ollama, giving flexibility and control over data and costs.

Choosing the right API isn’t just about features. Consider latency, privacy, data residency, and cost trade-offs. For example, a small team processing sensitive client data might prefer a self-hosted LLM, while a cloud-hosted API may suit fast prototyping.

Efficiency and Cost Management 💸

At first, sending prompts feels cheap—but at scale, every token matters. Production-ready usage focuses on prompt efficiency, parameter tuning, and intelligent model selection.

Token Optimization ✂️

Token usage directly affects cost and speed. Optimize by:

- Crafting concise prompts and avoiding redundant context.

- Using system messages for global instructions instead of repeating them.

- Employing prompt templates to standardize and reuse structures.

Parameter Tuning 🎛️

Control LLM behavior with parameters:

- temperature: randomness of output.

- top_p: nucleus sampling to limit probability mass.

- stop_sequences: halt output at defined markers.

This ensures outputs are relevant and focused, avoiding verbose or off-topic results.

Choosing the Right Model 🧩

Not all tasks need the most advanced model:

- Classification or filtering → lightweight, fast models.

- Creative content generation → larger, more nuanced models.

Matching the model to the task saves cost and improves efficiency.

Real-world tip: A SaaS team reduced API costs by 40% simply by moving repetitive classification tasks to a smaller model and reserving the larger one for creative generation.

A Code-Level Guide to Securing LLM API Interactions 🔐

The New Threat Landscape ⚠️

Traditional security tools like Web Application Firewalls (WAFs) aren’t enough against LLM-specific threats. One of the most common is prompt injection, where malicious inputs attempt to override instructions.

The OWASP Top 10 for LLM Applications highlights risks like:

- Prompt injection: malicious user instructions that trick the model.

- Data exfiltration: LLM unintentionally leaking sensitive info.

Defense requires a layered, code-first approach.

Defense in Depth: Practical Techniques 🛡️

Input Validation & "Instructional Fencing"

Inspect user prompts before sending them to an LLM:

def sanitize_prompt(user_input: str) -> str:

dangerous_patterns = ["ignore previous", "disregard instructions", "system override"]

for pattern in dangerous_patterns:

if pattern.lower() in user_input.lower():

raise ValueError("Potential injection detected")

return user_input

This prevents malicious instructions from altering LLM behavior.

Output Encoding and Sanitization

Treat all LLM responses as untrusted data. Encode them to prevent XSS if rendered in a browser:

function encodeOutput(text) {

const div = document.createElement("div");

div.innerText = text;

return div.innerHTML;

}

Architectural Pattern: The AI Gateway/Filter 🏰

Implement a proxy layer between your app and the LLM API. This gateway can:

- Log interactions for auditing and monitoring.

- Remove sensitive information (PII, secrets).

- Enforce content moderation consistently across all calls.

This mirrors traditional API gateways but is tailored to AI-specific threats.

Pro tip: Centralizing moderation prevents inconsistent or accidental exposure of sensitive data across multiple clients.

Designing for the Agentic Era: Making Your APIs LLM-Ready 🤖

The Paradigm Shift

The next frontier isn’t just calling LLM APIs—it’s building APIs that autonomous AI agents can use efficiently. Systems that allow AI agents to interact seamlessly with your endpoints unlock autonomous workflows and intelligent orchestration.

Core Principles for LLM-Friendly API Design 🧭

Semantic Clarity

Use explicit, descriptive names: temperature_celsius is clearer than temp. LLMs (and humans) interpret precise language better, reducing misunderstandings.

Machine-Readable Documentation 📑

Your OpenAPI spec is no longer just for developers—it’s how LLMs learn your API. Provide:

- Detailed parameter descriptions.

- Example requests and responses.

- Context for constraints and expected values.

Actionable Error Messages 🚨

Error responses should guide self-correction:

{

"error": "Invalid date format",

"expected_format": "YYYY-MM-DD"

}

This allows AI agents to adjust queries automatically, avoiding dead ends.

Documentation Structure

Consistency matters:

- Predictable headings and sections.

- Uniform naming conventions.

- Structured examples.

This helps LLMs form a mental map of your API’s capabilities, improving accuracy in autonomous calls.

Conclusion: From API Caller to AI Architect 🏗️

Mastering LLM APIs in 2025 isn’t just about sending prompts—it’s about building efficient, secure, and AI-ready systems.

By evolving from a simple API consumer to an AI architect, you lay the foundation for software where humans, applications, and AI agents collaborate seamlessly.

The future of intelligent systems starts with production-ready LLM API practices—this playbook is your guide.