The Data Gravity Problem: ETL Is the Bottleneck

The real-world scenario

You’re managing:

- Hundreds of millions (or billions) of web log rows

- A Snowflake warehouse used by BI, ML, and analytics teams

- A requirement to add country and city columns based on IP addresses

The old way (ETL)

Traditionally, teams would:

- Export IPs from Snowflake to S3

- Run a Python enrichment job on EC2

- Call a third-party IP geolocation API

- Write results back into Snowflake

This approach fails at enterprise scale:

❌ Slow and operationally complex

❌ Expands your security attack surface

❌ Creates stale, batch-only data

❌ Breaks data lineage and governance

The modern way (ELT)

Instead of moving data to the API, bring the API to the data.

Using Snowflake External Access Integrations, we can call Abstract API directly from inside Snowflake, enriching data in place with SQL-driven workflows.

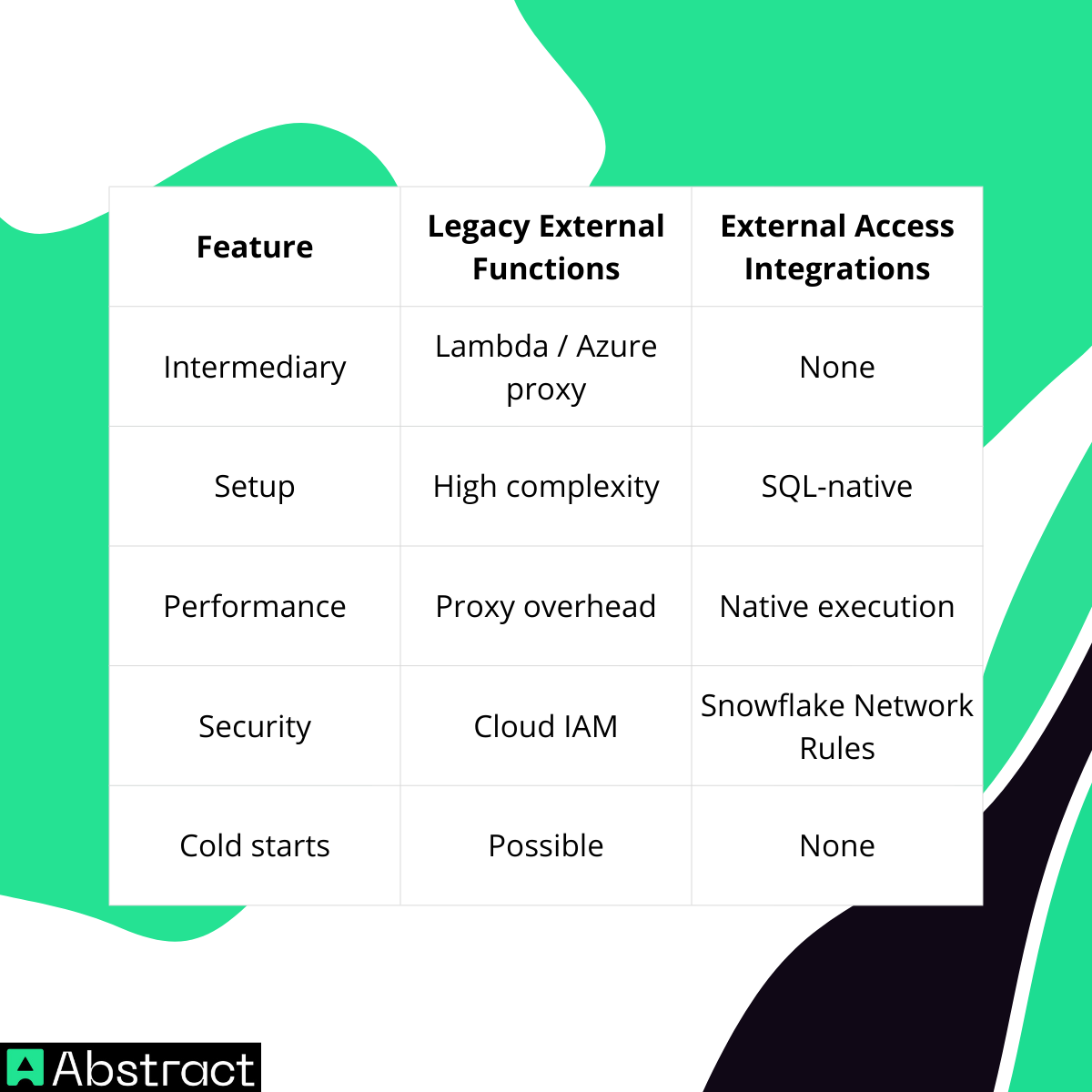

Architecture Shift: Proxy-Based vs Native Snowflake Access

- Terminology note: This article references “External Functions” in the headline for historical and SEO reasons. In practice, the implementation uses External Access Integrations, the modern and recommended evolution of External Functions in Snowflake.

Legacy approach: External Functions + Proxy

Historically, Snowflake External Functions required:

- AWS Lambda or Azure Functions

- API Gateway

- IAM role orchestration

- Additional networking layers

This proxy-based architecture added:

- Latency

- Cold starts

- Operational overhead

- Debugging complexity

Modern approach: External Access Integrations (Recommended)

Snowflake’s External Access Integrations allow Python UDFs running inside Snowflake to securely access the public internet—without any proxy.

The modern enrichment flow

Snowflake Table (IP addresses)

↓

Vectorized Python UDF (batch processing)

↓

↓

Enriched columns (Country, City, Postal Code)

Why this wins at enterprise scale

Security Setup: The Enterprise Configuration Model 🔐

Enterprise enrichment starts with controlled egress.

Snowflake enforces this using three explicit, auditable objects.

Network Rule (Egress Control)

Defines exactly which external domains Snowflake is allowed to reach.

CREATE OR REPLACE NETWORK RULE abstract_api_network_rule

MODE = EGRESS

TYPE = HOST_PORT

VALUE_LIST = ('ipgeolocation.abstractapi.com');

This ensures Snowflake cannot access the open internet, only Abstract API.

Secret Object (API Key Management)

Hardcoding API keys violates every enterprise security policy.

Snowflake Secrets encrypt and isolate credentials securely.

CREATE OR REPLACE SECRET abstract_api_key

TYPE = GENERIC_STRING

SECRET_STRING = 'YOUR_ABSTRACT_API_KEY_HERE';

External Access Integration (The Binder)

Binds network rules and secrets into a single governed object.

CREATE OR REPLACE EXTERNAL ACCESS INTEGRATION abstract_enrichment_integration

ALLOWED_NETWORK_RULES = (abstract_api_network_rule)

ALLOWED_AUTHENTICATION_SECRETS = (abstract_api_key)

ENABLED = TRUE;

This is what you grant to analytics engineers—not raw network access.

The Vectorized Python UDF: The Performance Multiplier 🏎️💨

The critical mistake to avoid

❌ Scalar Python UDFs

A scalar UDF makes one API call per row.

- 1M rows × 100ms per request = ~27 hours

- Completely unusable at scale

The correct solution: Vectorized UDFs + asyncio

Snowflake vectorized UDFs:

- Receive data as a pandas.Series

- Process batches (e.g., 500–1000 IPs)

- Return results as a vector

⚠️ Important nuance: Abstract API validates one IP per request. Vectorization does not mean bulk requests—it means parallel orchestration.

Python implementation (production-grade)

import _snowflake

import pandas as pd

import asyncio

import aiohttp

from _snowflake import vectorized

api_key = _snowflake.get_generic_secret_string('abstract_api_key')

async def fetch_ip_data(session, ip):

url = f"https://ipgeolocation.abstractapi.com/v1/?api_key={api_key}&ip_address={ip}"

try:

async with session.get(url, timeout=5) as response:

if response.status == 200:

return await response.json()

elif response.status == 429:

return {"status": "rate_limited"}

return {"status": f"error_{response.status}"}

except Exception:

return {"status": "timeout"}

async def process_batch(ips):

async with aiohttp.ClientSession() as session:

tasks = [fetch_ip_data(session, ip) for ip in ips]

return await asyncio.gather(*tasks)

@vectorized(input=pd.Series)

def enrich_ip_intelligence(ips):

loop = asyncio.get_event_loop()

results = loop.run_until_complete(process_batch(ips.tolist()))

return pd.Series([str(r) for r in results])

Managing Scale, Cost, and Reliability 💰📉

1. Cache once, enrich forever (IP Dimension Table)

Web logs are highly repetitive.

Best practice:

- Create DIM_IP_GEOLOCATION

- Enrich only new IPs

- Join back to logs

This pattern routinely reduces API usage by 80–90%.

2. Batch size tuning

Avoid timeouts by controlling batch size.

CREATE OR REPLACE FUNCTION ENRICH_IP_INTELLIGENCE(ip_address STRING)

RETURNS STRING

LANGUAGE PYTHON

RUNTIME_VERSION = '3.8'

PACKAGES = ('pandas', 'aiohttp')

HANDLER = 'enrich_ip_intelligence'

EXTERNAL_ACCESS_INTEGRATIONS = (abstract_enrichment_integration)

SECRETS = ('abstract_api_key' = abstract_api_key)

MAX_BATCH_SIZE_ROWS = 500;

3. Incremental enrichment with Streams & Tasks

For continuous ingestion:

- Use Snowflake Streams to detect new IPs

- Use Tasks to enrich incrementally

- Avoid reprocessing historical data

This approach integrates cleanly with dbt models and enterprise ELT pipelines.

The Magic Moment: SQL-Based Enrichment ✨

UPDATE WEB_LOGS

SET

COUNTRY = PARSE_JSON(ENRICH_IP_INTELLIGENCE(IP_ADDRESS)):country::string,

CITY = PARSE_JSON(ENRICH_IP_INTELLIGENCE(IP_ADDRESS)):city::string

WHERE COUNTRY IS NULL;

- Millions of rows enriched.

- No exports.

- No proxies.

- Pure SQL.

Conclusion: Snowflake as a Live Enrichment Engine 🎯

By combining:

- Snowflake External Access Integrations

- Vectorized Python UDFs

- Abstract API’s high-scale IP intelligence

You’ve transformed Snowflake into a native, enterprise-grade data enrichment engine.

Key takeaways

- Don’t export data—enrich in place

- Avoid proxies—use External Access Integrations

- Vectorize everything

- Cache IP intelligence

- Scale safely with concurrency-aware APIs

👉 Get your Abstract API key and start enriching your Snowflake IP geolocation data today.

👉 For billions of rows or historical backfills, contact Abstract API for dedicated high-throughput rate limits.