The Problem with the Static Status Quo

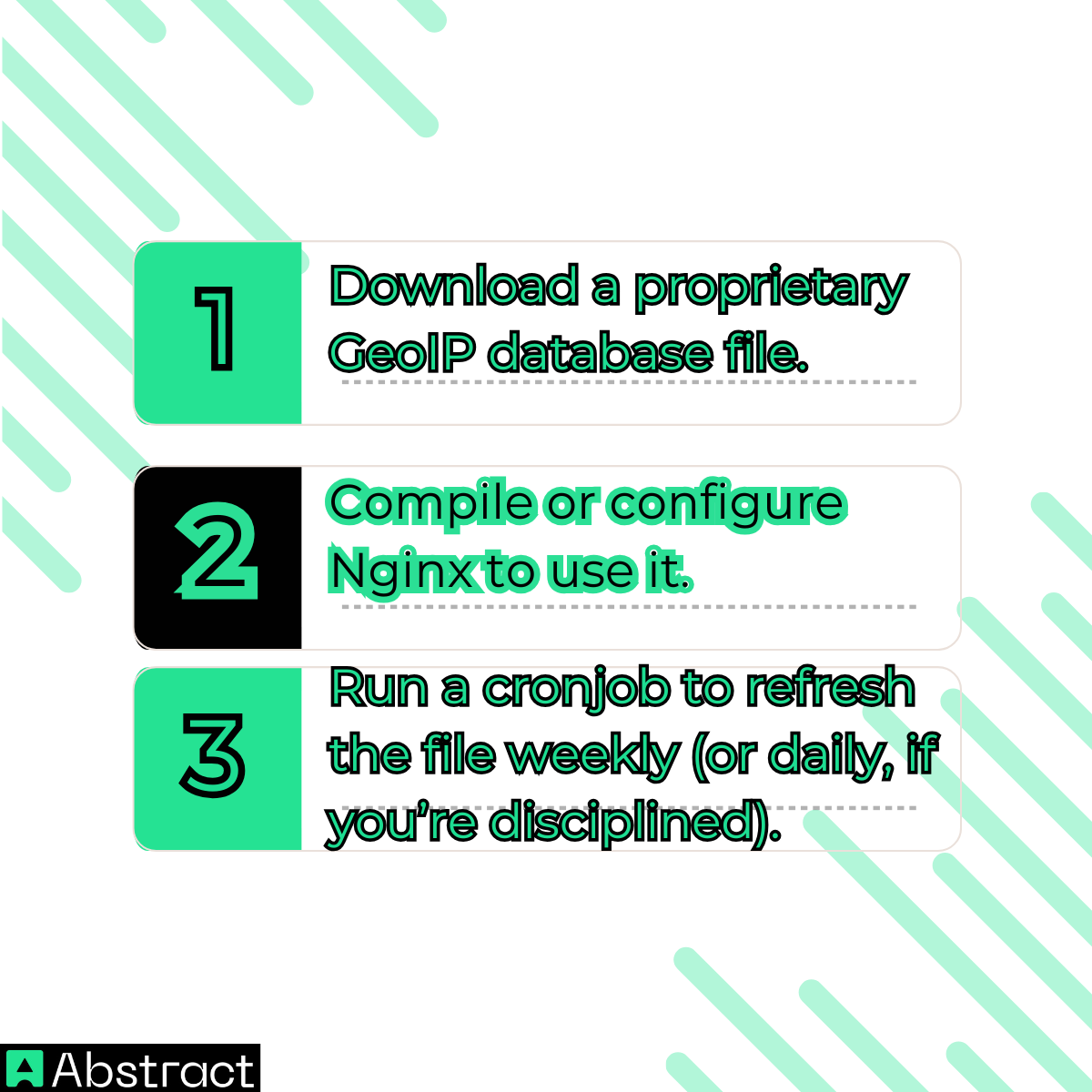

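The legacy geo-blocking workflow usually looks like this: download an IP database file, load it into Nginx through a module, and refresh it on a schedule with a cronjob.

This model breaks down fast:

- Accuracy: IP allocations change continuously, so a database refreshed weekly is stale between updates.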

- Maintenance: Cronjobs fail. Files corrupt. Formats change.

- Rigidity: Updating rules often requires config reloads or fleet-wide syncs.

Legacy modules like ngx_http_geoip_module are fast, but the data they serve is only as current as the last database refresh.
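For reference, a legacy setup of this kind might look like the fragment below. It is a sketch, not a recommended configuration: the database path and the upstream address are assumptions, and the country list simply mirrors the one used later in this article.

```nginx
# Legacy approach (sketch): country lookup from a local database file.
# The .dat path is an assumption; the file must be refreshed out of band.
http {
    geoip_country /usr/share/GeoIP/GeoIP.dat;

    # Map the looked-up country code to a block flag
    map $geoip_country_code $blocked_country {
        default 0;
        CN      1;
        RU      1;
    }

    server {
        listen 80;

        location / {
            if ($blocked_country) {
                return 403;
            }
            proxy_pass http://127.0.0.1:8080;  # hypothetical upstream
        }
    }
}
```

Changing the country list or swapping the database file means editing this configuration and reloading every node that carries it.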

The Modern Alternative: Just-in-Time Geolocation at the Edge

Instead of managing databases, we move to a Just-in-Time lookup model.

Using OpenResty, we perform a non-blocking lookup against AbstractAPI once per IP address per cache lifetime, then cache the result in Nginx shared memory (RAM). Until that entry expires, every subsequent request from that IP is evaluated locally, without touching the network.

This architecture is:

- Zero-maintenance – No files, no cronjobs, no recompiles

- Real-time – Every cache miss is answered with fresh API data, so no entry is older than its TTL

- Extremely fast – After the first request, decisions happen in microseconds

Most importantly, this logic runs before the request ever reaches your application.

Architecture Overview: The Intelligent Edge

The flow follows a classic cache-aside pattern, implemented directly inside the Nginx event loop:

- Incoming Request: A client hits your Nginx edge or load balancer.

- Shared Memory Lookup: Nginx checks lua_shared_dict (in-RAM storage with atomic operations) using the client IP as the key.

- Cache HIT: The country code is found, so the request is allowed or denied immediately. The added latency is a local memory read, with no network round trip.

- Cache MISS: Nginx performs a non-blocking HTTP request to AbstractAPI.

- Ingestion: The returned country code is cached in shared memory with a TTL (e.g., 24h).

- Enforcement: The request is proxied upstream or terminated with 403 Forbidden.

Once an IP is cached, the decision is a local memory lookup, so per-request performance is comparable to native modules.
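The flow above can be sketched in plain Lua, independent of Nginx. This is an illustration of the cache-aside pattern only: new_cache is a hypothetical in-memory stand-in for lua_shared_dict, and country_for and the lookup callback are names invented for this example.

```lua
-- Hypothetical in-memory stand-in for a lua_shared_dict (no size limit).
-- `now` is a function returning the current time in seconds.
local function new_cache(now)
    local store = {}
    return {
        get = function(_, key)
            local entry = store[key]
            if entry and entry.expires > now() then
                return entry.value
            end
            store[key] = nil -- expired or absent
            return nil
        end,
        set = function(_, key, value, ttl)
            store[key] = { value = value, expires = now() + ttl }
        end,
    }
end

-- Cache-aside: consult the cache first, call `lookup` only on a miss,
-- and store a successful result with the given TTL (seconds).
local function country_for(cache, ip, lookup, ttl)
    local country = cache:get(ip)
    if country then
        return country, "HIT"
    end
    country = lookup(ip)
    if country then
        cache:set(ip, country, ttl)
    end
    return country, "MISS"
end
```

Only the first call for a given IP within the TTL reaches the lookup function; later calls are served from the table, which is the property the shared dictionary provides across Nginx workers.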

Prerequisites & Setup

Why OpenResty (Not Vanilla Nginx)

While vanilla Nginx can embed Lua, OpenResty is purpose-built for this use case. It bundles:

- Nginx

- LuaJIT

- Non-blocking I/O libraries

- Tight integration with the event loop

Non-blocking I/O inside the event loop is what makes calling an external API from the request path viable at high throughput.

Install OpenResty

On Debian or Ubuntu, with the official OpenResty APT repository already added:

sudo apt-get install openresty

Dependencies

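We use lua-resty-http, which can be installed with opm (opm get ledgetech/lua-resty-http) or LuaRocks (luarocks install lua-resty-http).

This is critical. Blocking clients such as LuaSocket's socket.http stall the entire worker process while they wait on the network, which destroys throughput. lua-resty-http is built on the cosocket API, so it is non-blocking and safe at scale.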

Get an API Key

Sign up at AbstractAPI and generate an IP Geolocation API key. Each Nginx instance will use it for cache misses.

The Implementation: A Lua-Powered Firewall

Configure Shared Memory

Inside the http block of nginx.conf:
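A minimal sketch of that declaration follows. The zone name ip_cache matches the ngx.shared.ip_cache reference in the Lua module; the 10 MB size is an assumption to tune against the number of distinct client IPs you expect.

```nginx
http {
    # Shared memory zone for IP -> country code entries.
    # The size (10m) is an assumption, not a measured recommendation.
    lua_shared_dict ip_cache 10m;
}
```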

- ngx.shared.DICT operations are atomic, and the dictionary is shared across all worker processes.

The Lua Logic (geo_logic.lua)

```lua
local http = require "resty.http"
local cjson = require "cjson.safe"

local _M = {}

-- Countries to reject, keyed by ISO 3166-1 alpha-2 code
local blocked = { CN = true, RU = true }

function _M.check_access()
    local cache = ngx.shared.ip_cache
    local client_ip = ngx.var.remote_addr

    -- 1. Check shared memory
    local country = cache:get(client_ip)

    if not country then
        -- 2. Cache MISS: query AbstractAPI
        local httpc = http.new()
        httpc:set_timeout(1000) -- milliseconds

        local res, err = httpc:request_uri(
            "https://ipgeolocation.abstractapi.com/v1/",
            {
                query = {
                    api_key = "YOUR_ABSTRACT_API_KEY",
                    ip_address = client_ip
                },
                method = "GET",
                ssl_verify = false -- enable with proper CA certs in production
            }
        )

        if res and res.status == 200 then
            -- cjson.safe returns nil instead of raising on malformed JSON
            local body = cjson.decode(res.body)
            if body and body.country_code then
                country = body.country_code
                cache:set(client_ip, country, 86400) -- 24h TTL
            end
        else
            -- Fail-open: preserve availability
            -- err is nil when the API answered with a non-200 status
            ngx.log(ngx.ERR, "AbstractAPI lookup failed: ",
                    err or ("HTTP status " .. res.status))
            return
        end
    end

    -- 3. Enforcement (country is still nil if the response had no country_code)
    if country and blocked[country] then
        ngx.log(ngx.WARN, "Blocked IP ", client_ip, " from ", country)
        ngx.exit(ngx.HTTP_FORBIDDEN)
    end
end

return _M
```

This logic runs before your application sees the request.
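To run in that position, the module has to be attached to the access phase. The fragment below is one way to wire it up, under several assumptions: the Lua file lives in /etc/openresty/lua/, the upstream listens on 127.0.0.1:8080, and 1.1.1.1 is an acceptable DNS resolver (Nginx cosockets need a resolver directive to resolve the API hostname).

```nginx
http {
    # Where geo_logic.lua is stored (assumed path)
    lua_package_path "/etc/openresty/lua/?.lua;;";

    # Same zone that the Lua module reads as ngx.shared.ip_cache
    lua_shared_dict ip_cache 10m;

    # Required for hostname resolution from Lua cosockets (assumed resolver)
    resolver 1.1.1.1;

    server {
        listen 80;

        location / {
            # Access phase: runs before the request is proxied
            access_by_lua_block {
                require("geo_logic").check_access()
            }
            proxy_pass http://127.0.0.1:8080;  # hypothetical upstream
        }
    }
}
```

When check_access() returns without calling ngx.exit(), processing continues to proxy_pass; when it exits with 403, the upstream is never contacted.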

Performance Tuning for High Traffic

Keepalive Connections

Each cache miss involves TLS negotiation. To reduce overhead, connections should be reused.

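- Note: request_uri() manages the connection internally and returns it to the keepalive pool after a successful request, so there is no connection left to call set_keepalive() on afterward. Its options table accepts keepalive_timeout and keepalive_pool to tune that pool. For extreme scale and maximum control, advanced setups may prefer httpc:connect() + httpc:request() to manage keepalive pools explicitly.

Conceptually, once the response body has been read:

httpc:set_keepalive(60000, 10)

This reduces latency on cache misses by reusing an already-negotiated TLS connection, here with a 60-second idle timeout and a pool of up to 10 connections per worker.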
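The explicit variant mentioned in the note might look like the sketch below. It assumes a lua-resty-http release that supports the table form of connect(), and that trusted CA certificates are configured for ssl_verify. fetch_geo_body is a hypothetical helper name, and the resty.http module is passed in as an argument purely so the call order can be exercised with a stub.

```lua
-- Hypothetical helper: fetch the raw geolocation response body for one IP
-- and return the connection to the keepalive pool afterward.
-- `http` is the resty.http module (injected so it can be stubbed).
local function fetch_geo_body(http, client_ip, api_key)
    local host = "ipgeolocation.abstractapi.com"
    local httpc = http.new()
    httpc:set_timeout(1000) -- milliseconds

    local ok, err = httpc:connect({
        scheme = "https",
        host = host,
        port = 443,
        ssl_verify = true, -- assumes lua_ssl_trusted_certificate is set
    })
    if not ok then
        return nil, "connect failed: " .. tostring(err)
    end

    local res, req_err = httpc:request({
        method = "GET",
        path = "/v1/",
        query = { api_key = api_key, ip_address = client_ip },
        headers = { ["Host"] = host },
    })
    if not res then
        return nil, "request failed: " .. tostring(req_err)
    end

    -- The body must be fully read before the connection can be reused
    local body, read_err = res:read_body()
    if not body then
        return nil, "read failed: " .. tostring(read_err)
    end

    -- 60 s idle timeout, up to 10 pooled connections per worker
    httpc:set_keepalive(60000, 10)

    if res.status ~= 200 then
        return nil, "unexpected status: " .. tostring(res.status)
    end
    return body
end
```

Inside OpenResty this would be called as fetch_geo_body(require "resty.http", ngx.var.remote_addr, api_key), with the returned body decoded by cjson as in the main module.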

Fail-Open vs. Fail-Closed

You must choose your failure mode:

- Fail-Open (Recommended): If the API is unreachable, allow traffic. This prioritizes availability and user experience.

- Fail-Closed: Block on failure. Suitable for regulated or high-security environments.

This decision should match your threat model.
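The two modes differ only in what happens when no country code is available. A small sketch of that decision in plain Lua, where decide and its fail_closed flag are hypothetical names introduced for illustration:

```lua
-- Hypothetical policy helper.
-- country:     ISO country code, or nil when the lookup failed
-- blocked:     set of blocked codes, e.g. { CN = true, RU = true }
-- fail_closed: true to deny when the country is unknown
local function decide(country, blocked, fail_closed)
    if country == nil then
        -- Lookup failed: the configured failure mode decides
        if fail_closed then
            return "deny"
        end
        return "allow"
    end
    if blocked[country] then
        return "deny"
    end
    return "allow"
end
```

In the OpenResty module, a "deny" result would correspond to ngx.exit(ngx.HTTP_FORBIDDEN) and an "allow" result to returning normally from the access handler.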

Cache Eviction Strategy

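Consumer IP addresses are frequently reassigned via DHCP, in many networks within a day or two. A 24-hour TTL balances accuracy against cache efficiency and limits how long a stale entry can misclassify an address after it changes hands. Entries can also leave the cache earlier: when the shared memory zone fills up, lua_shared_dict evicts least-recently-used items to make room for new ones.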

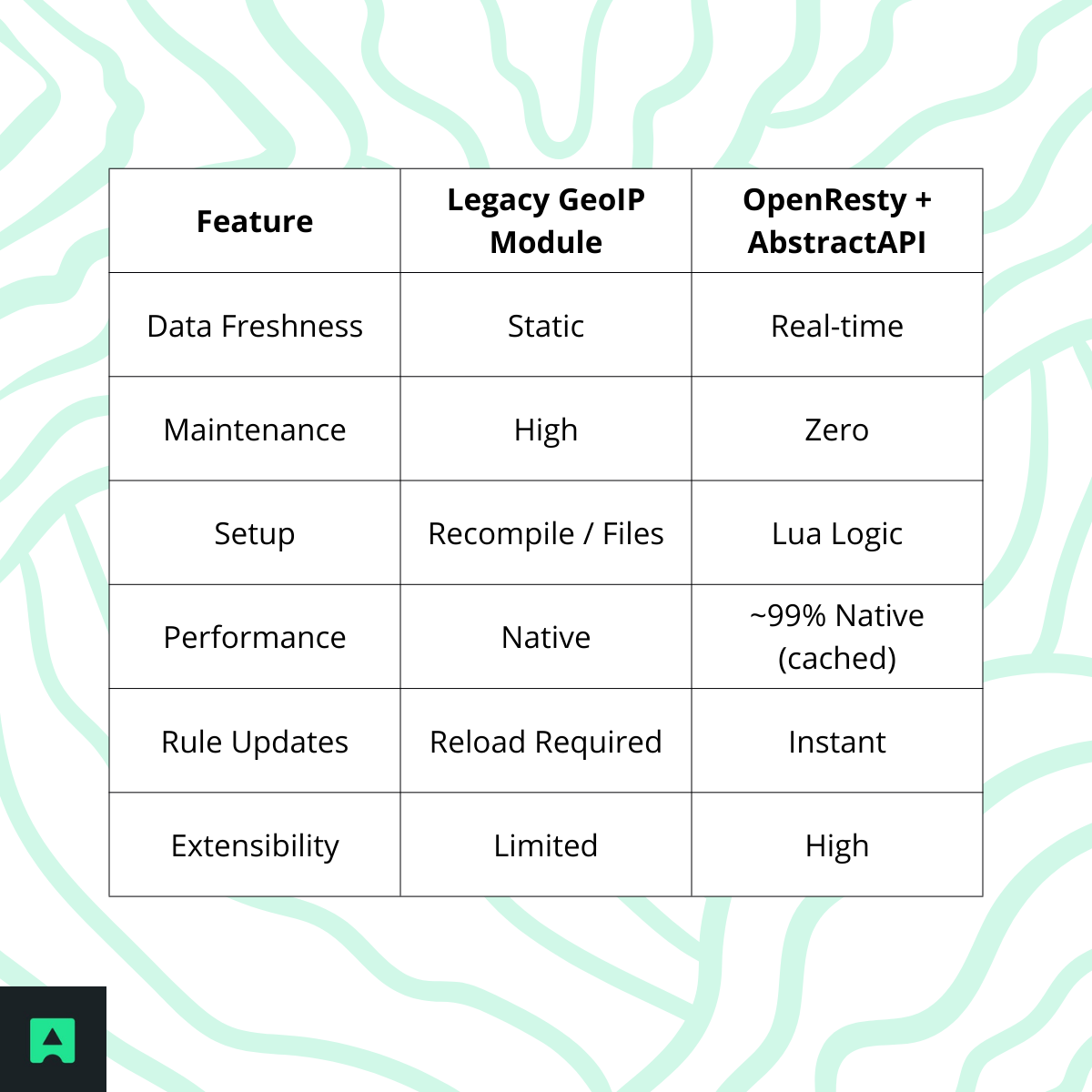

Nginx Module vs. API-Driven Geo-Blocking

After the first request from a given IP, both approaches answer from local memory, so the performance difference is negligible for most real-world traffic.

Strategic Advantages of the Lua Approach

By enforcing geo-blocking at the Nginx layer, you are practicing Defense in Depth. Malicious or unwanted traffic is rejected before it consumes:

- Application threads

- Database connections

- Cache capacity

Using AbstractAPI also unlocks future logic: ASN blocking, data-center detection, VPN/proxy signals — all without changing your infrastructure model.

Conclusion

We’ve moved geo-blocking from a brittle, file-based system into a real-time, edge-native firewall.

With OpenResty and AbstractAPI, you get:

- Always-fresh IP intelligence

- Sub-millisecond enforcement

- Zero operational overhead

This is how modern infrastructure protects itself — fast, accurate, and invisible to legitimate users.

Ready to harden your edge? Secure your infrastructure with Abstract’s real-time IP Intelligence data. 🛡️