The synchronous trap 🧱

A traditional Kafka consumer processes messages one at a time:

- Read a message

- Call an external API

- Wait for the response

- Process the next message

If the external API takes 100 ms, a single consumer thread is capped at roughly 10 messages per second (1 s divided by 0.1 s per call). Adding Kafka partitions does not help if every consumer thread is blocked waiting on I/O.

For FinTech workloads — where throughput often reaches thousands of events per second — this architecture collapses under real-world traffic.
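
To make the arithmetic concrete, here is a minimal sketch of the throughput ceiling. The function name `max_throughput` and its `in_flight` parameter are illustrative assumptions, not part of any library; the model ignores CPU time and assumes every call takes the same latency.

```python
def max_throughput(latency_s: float, in_flight: int = 1) -> float:
    """Upper bound on messages per second when each message waits
    `latency_s` seconds on I/O and `in_flight` calls overlap."""
    if latency_s <= 0:
        raise ValueError("latency_s must be positive")
    if in_flight < 1:
        raise ValueError("in_flight must be at least 1")
    return in_flight / latency_s


if __name__ == "__main__":
    # One blocking call at a time, 100 ms per call
    print(f"blocking consumer:   ~{max_throughput(0.100):.0f} msg/s")
    # Same latency, but 100 calls overlapping (hypothetical figure)
    print(f"100 calls in flight: ~{max_throughput(0.100, in_flight=100):.0f} msg/s")
```

The ceiling scales with the number of overlapping calls, not with the number of partitions, which is why the rest of this article focuses on keeping many calls in flight.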

The async solution 🚀

The fix is asynchronous, non-blocking I/O.

Instead of waiting for each validation call to finish, you:

- Launch dozens or hundreds of API calls concurrently

- Let the event loop process responses as they arrive

- Keep CPU cores busy instead of idle
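
The difference can be sketched with the standard library alone. In this hedged example, `fake_validation_call` stands in for a real API request (it only sleeps), and the function names are made up for illustration.

```python
import asyncio
import time


async def fake_validation_call(latency_s: float) -> str:
    # Stand-in for a network request: sleeps without blocking the event loop
    await asyncio.sleep(latency_s)
    return "ok"


async def run_sequential(n: int, latency_s: float) -> float:
    """Await each call before starting the next one; returns elapsed seconds."""
    start = time.perf_counter()
    for _ in range(n):
        await fake_validation_call(latency_s)
    return time.perf_counter() - start


async def run_concurrent(n: int, latency_s: float) -> float:
    """Start all calls at once and wait for them together; returns elapsed seconds."""
    start = time.perf_counter()
    await asyncio.gather(*(fake_validation_call(latency_s) for _ in range(n)))
    return time.perf_counter() - start


async def compare(n: int = 20, latency_s: float = 0.01) -> tuple:
    sequential = await run_sequential(n, latency_s)
    concurrent = await run_concurrent(n, latency_s)
    return sequential, concurrent


if __name__ == "__main__":
    seq, conc = asyncio.run(compare())
    print(f"sequential: {seq:.3f}s, concurrent: {conc:.3f}s")
```

The sequential run takes about `n` times the latency, while the concurrent run takes about one latency in total, because the waits overlap.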

Using Python’s asyncio, modern Kafka frameworks, and high-performance APIs like AbstractAPI, you can turn a fragile pipeline into a streaming powerhouse.

In this article, you’ll build an end-to-end async validation pipeline using:

- FastStream (async-native Kafka framework)

- Pydantic for schema validation

- aiohttp for non-blocking API calls

- Dead Letter Queues (DLQs) for fault tolerance

The Modern Stack: FastStream + Pydantic 🧠

Before writing a single line of code, let’s talk about tooling — because using the wrong Kafka client can silently kill performance.

Why not kafka-python?

kafka-python is synchronous and thread-based. It was great years ago, but in async systems it introduces blocking behavior that negates the benefits of asyncio.

For high-throughput pipelines, you want:

- Async I/O

- Native await support

- Structured message validation

- First-class error handling

Enter FastStream ⚡

FastStream is a modern streaming framework whose Kafka support is built on top of aiokafka. It’s designed for event-driven Python systems and integrates seamlessly with asyncio.

Key advantages:

- Async-native Kafka consumers and producers

- Built-in Pydantic schema validation

- Declarative routing between topics

- Graceful exception handling (perfect for DLQs)

This makes FastStream ideal for Kafka message validation scenarios where correctness and throughput are equally important.

If malformed JSON or incorrect data types appear in the stream, FastStream rejects them before your business logic even runs, saving CPU cycles and preventing downstream corruption.

Architecture Pattern: Async Validation Pipeline 🏗️

Let’s define the architecture you’ll implement.

Stream topology

Input Topic: raw-transactions. Contains unvalidated payment events.

Processor: Python FastStream service. Consumes events, validates them asynchronously.

Validation Layers:

- Schema Validation → Pydantic (structure & types)

- Data Validation → AbstractAPI (IP intelligence)

Output Topics:

✅ validated-transactions → Safe events forwarded to payment processors

🚨 fraud-alerts or dead-letter-queue → Risky or failed validations

Business rule example

A simple but effective fraud heuristic:

- If the IP country does not match the billing country, flag the transaction.

This pattern is widely used in FinTech fraud detection systems, especially for early-stage filtering before heavier ML models kick in.
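
As a rough sketch, the heuristic can be written as a pure function. It assumes the geolocation response carries a `country_code` key and that both values are two-letter country codes; the function name and the choice to flag unknown locations are illustrative assumptions.

```python
def is_country_mismatch(geo_data: dict, billing_country: str) -> bool:
    """Return True when the IP country differs from the billing country."""
    # Normalize case and whitespace so "us" and "US " compare equal
    ip_country = (geo_data.get("country_code") or "").strip().upper()
    billing = billing_country.strip().upper()
    if not ip_country:
        # Assumption: an unknown IP location is flagged for review
        # rather than silently accepted
        return True
    return ip_country != billing


if __name__ == "__main__":
    print(is_country_mismatch({"country_code": "DE"}, "US"))
```

Keeping the rule separate from the I/O code makes it easy to unit test and to swap for a stricter policy later.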

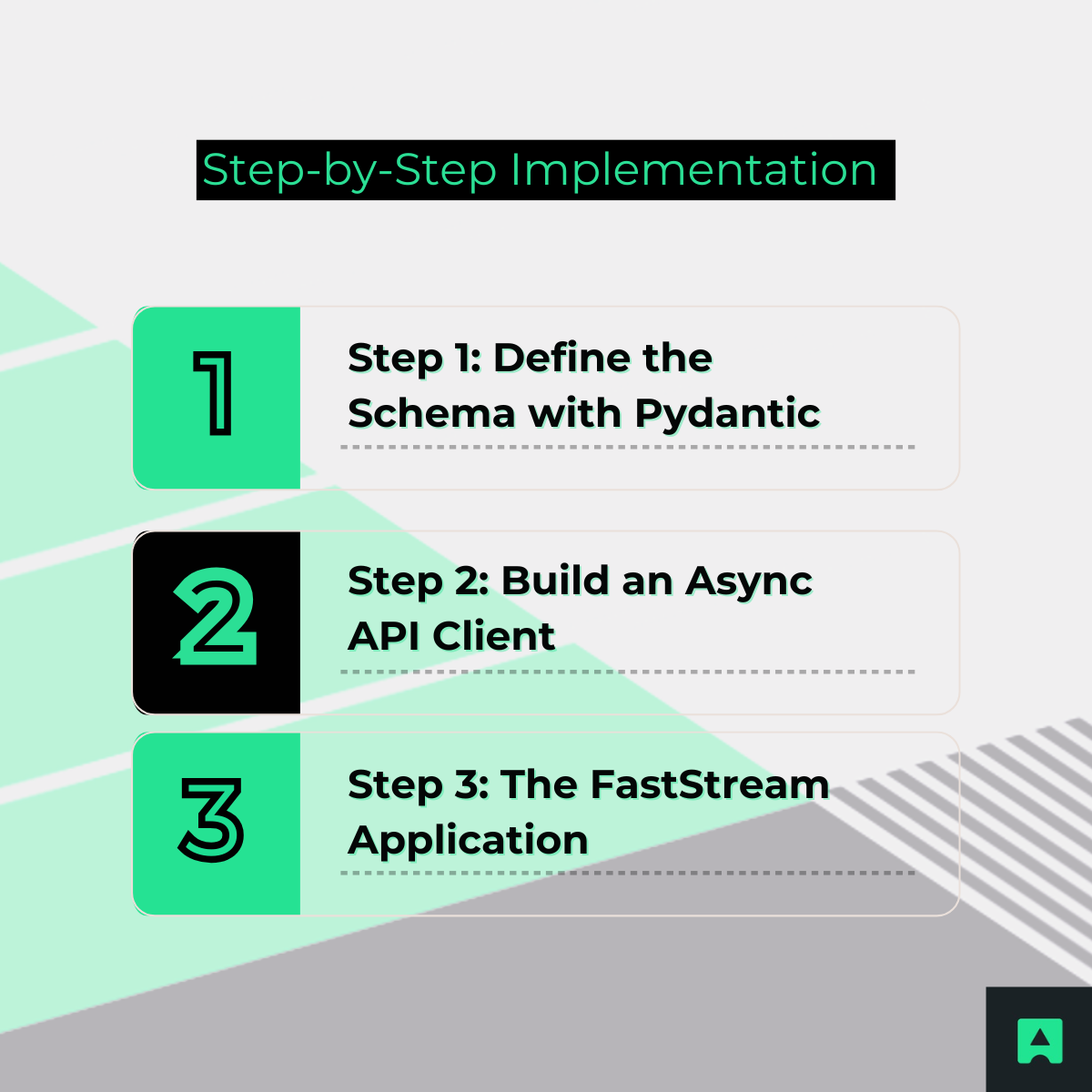

Step-by-Step Implementation 🧩

Step 1: Define the Schema with Pydantic

Schema validation ensures that incoming messages have the correct structure before any expensive processing happens.

from pydantic import BaseModel

class TransactionModel(BaseModel):
    user_id: str
    ip_address: str
    amount: float
    currency: str
    billing_country: str

This handles:

- Missing fields

- Incorrect data types

- Malformed JSON payloads

💡 Important distinction:

- Schema validation → Is the data shaped correctly? (Pydantic)

- Data validation → Is the data trustworthy? (AbstractAPI)
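
To see schema validation reject a bad event, here is a small sketch using the same model. The helper `failing_fields` is a hypothetical name introduced for illustration; it relies only on Pydantic's `ValidationError` and its `errors()` method.

```python
from pydantic import BaseModel, ValidationError


class TransactionModel(BaseModel):
    user_id: str
    ip_address: str
    amount: float
    currency: str
    billing_country: str


def failing_fields(payload: dict) -> set:
    """Return the names of fields that fail schema validation (empty if valid)."""
    try:
        TransactionModel(**payload)
    except ValidationError as exc:
        # Each error entry carries a "loc" tuple; its first item is the field name
        return {str(err["loc"][0]) for err in exc.errors()}
    return set()


if __name__ == "__main__":
    bad_event = {
        "user_id": "u-1",
        "ip_address": "203.0.113.7",
        "amount": "not-a-number",  # wrong type
        "currency": "USD",
        # billing_country is missing
    }
    print(sorted(failing_fields(bad_event)))
```

An event like `bad_event` never reaches the IP lookup, so no API call is spent on data that is already known to be malformed.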

Step 2: Build an Async API Client

This is where most systems fail.

❌ What not to do

import requests

requests.get("https://api.abstractapi.com/...")

This blocks the event loop. One request = one frozen consumer.

✅ The correct async approach

Use aiohttp or httpx in async mode.

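import aiohttp

ABSTRACT_API_KEY = "your_api_key_here"

# Bound the whole request (connect + read) to 5 seconds
REQUEST_TIMEOUT = aiohttp.ClientTimeout(total=5)

async def validate_ip_async(session: aiohttp.ClientSession, ip: str) -> dict:
    url = "https://ipgeolocation.abstractapi.com/v1/"
    params = {
        "api_key": ABSTRACT_API_KEY,
        "ip_address": ip,
    }
    # Reuse the caller's session so connections are pooled across calls
    async with session.get(url, params=params, timeout=REQUEST_TIMEOUT) as response:
        response.raise_for_status()  # surface 4xx/5xx responses as exceptions
        return await response.json()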

Connection pooling matters 🧠

Creating a new HTTP connection per message is expensive. Always reuse the ClientSession to enable TCP connection pooling — a massive performance win at scale.

This is especially important when integrating with high-volume async APIs like AbstractAPI, which are optimized for concurrent requests.

👉 Learn more about AbstractAPI’s IP intelligence features here.

Step 3: The FastStream Application

Now let’s wire everything together.

from faststream import FastStream
from faststream.kafka import KafkaBroker
import aiohttp
import asyncio

broker = KafkaBroker("localhost:9092")
app = FastStream(broker)

# One shared HTTP session for the whole process, created on startup
session: aiohttp.ClientSession | None = None

@app.on_startup
async def startup():
    global session
    session = aiohttp.ClientSession()

@app.on_shutdown
async def shutdown():
    # Guard against shutdown running before startup completed
    if session is not None:
        await session.close()

@broker.subscriber("raw-transactions")
@broker.publisher("validated-transactions")
async def handle_transaction(msg: TransactionModel):
    geo_data = await validate_ip_async(session, msg.ip_address)
    if geo_data["security"]["is_vpn"]:
        raise ValueError("VPN detected")
    if geo_data["country_code"] != msg.billing_country:
        raise ValueError("Country mismatch")
    return msg  # the return value is published to validated-transactions

What’s happening here:

- Messages are consumed asynchronously

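- External API calls are awaited, so they yield to the event loop instead of blocking it. Awaiting alone does not process several messages at once, though: how many messages one subscriber handles in parallel depends on its concurrency settings (recent FastStream releases document a max_workers option for this; check the version you run)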

- Valid messages flow downstream automatically

- Risky transactions raise exceptions (handled next 👇)

Advanced Pattern: Dead Letter Queues (DLQ) ☠️

In FinTech, you never drop data.

If validation fails due to:

- API timeouts

- Data inconsistencies

- Fraud signals

…the event must be preserved for manual review or reprocessing.

What is a DLQ?

A Dead Letter Queue is a Kafka topic that stores messages that couldn’t be processed successfully. This allows:

- Post-mortem analysis

- Replay after bug fixes

- Regulatory audit trails
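
For those uses, a DLQ record is most helpful when it carries the failure context alongside the original event. The envelope below is one possible shape, not a standard; the function name and every field name are assumptions made for illustration.

```python
import json
from datetime import datetime, timezone


def build_dlq_record(
    event: dict,
    error: Exception,
    attempts: int,
    source_topic: str = "raw-transactions",
) -> bytes:
    """Wrap a failed event with enough context to analyze or replay it later."""
    record = {
        "original_event": event,               # untouched payload, for replay
        "error_type": type(error).__name__,    # e.g. "TimeoutError"
        "error_message": str(error),
        "attempts": attempts,                  # tries made before giving up
        "source_topic": source_topic,          # where to replay the event from
        "failed_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(record).encode("utf-8")


if __name__ == "__main__":
    raw = build_dlq_record({"user_id": "u-1"}, ValueError("Country mismatch"), attempts=1)
    print(raw.decode("utf-8"))
```

Storing the original payload unmodified keeps replay simple: a reprocessing job can read `original_event` and publish it back to `source_topic` once the underlying issue is fixed.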

DLQ handling in FastStream

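Do not assume the Kafka subscriber routes failures to a DLQ for you: options such as retry have changed across FastStream releases, so check the documentation for the version you run. A portable approach is to catch the failure in the handler and publish the event to the DLQ topic explicitly:

@broker.subscriber("raw-transactions")
async def handle_transaction(msg: TransactionModel):
    try:
        # run_checks is a placeholder for the validation logic from Step 3
        await run_checks(msg)
    except Exception as exc:
        # Preserve the event and the failure reason instead of dropping it
        await broker.publish(
            {"event": msg.model_dump(), "error": str(exc)},  # model_dump() is Pydantic v2
            topic="dead-letter-queue",
        )
        return
    await broker.publish(msg, topic="validated-transactions")

Because the exception is handled inside the handler, a failed event lands in the DLQ without crashing the consumer or blocking the stream.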

This pattern is essential for financial systems under compliance constraints.
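
Transient failures such as timeouts are usually worth retrying before an event is dead-lettered, while fraud signals are not. The following is a framework-agnostic sketch of that policy; `process_with_retry`, `handler`, and `send_to_dlq` are hypothetical names, and the backoff values are arbitrary.

```python
import asyncio
from typing import Any, Awaitable, Callable


async def process_with_retry(
    event: Any,
    handler: Callable[[Any], Awaitable[None]],
    send_to_dlq: Callable[[Any, Exception], Awaitable[None]],
    attempts: int = 3,
    base_delay_s: float = 0.05,
    retry_on: tuple = (asyncio.TimeoutError, ConnectionError),
) -> bool:
    """Run handler(event); retry transient errors, dead-letter everything else.

    Returns True if the event was processed, False if it went to the DLQ.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Exception = RuntimeError("no attempt was made")
    for attempt in range(1, attempts + 1):
        try:
            await handler(event)
            return True
        except retry_on as exc:
            last_error = exc
            if attempt < attempts:
                # Exponential backoff: base, 2x base, 4x base, ...
                await asyncio.sleep(base_delay_s * 2 ** (attempt - 1))
        except Exception as exc:
            # Non-transient failure (e.g. a fraud signal): retrying will not help
            last_error = exc
            break
    await send_to_dlq(event, last_error)
    return False
```

Separating transient from permanent errors avoids hammering the API with requests that are certain to fail again, and it keeps the DLQ focused on events that genuinely need review.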

Scaling & Concurrency Control 🔧

Async doesn’t mean infinite concurrency.

If you fire unlimited API calls, you’ll hit:

- API rate limits (HTTP 429)

- Network saturation

- Memory pressure

Controlled concurrency with Semaphores

import asyncio

# At most 100 validation calls in flight at any moment
semaphore = asyncio.Semaphore(100)

async def validate_with_limit(session, ip):
    async with semaphore:
        return await validate_ip_async(session, ip)

This caps the number of concurrent API calls while still allowing hundreds of messages per second per worker.
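
The cap can be checked without any network access. This sketch replaces the API call with a sleep and records the highest number of calls that were in flight at once; `run_limited` and its parameters are illustrative names.

```python
import asyncio


async def run_limited(n_tasks: int, limit: int, latency_s: float = 0.01) -> int:
    """Run n_tasks fake calls under a semaphore; return the peak concurrency seen."""
    semaphore = asyncio.Semaphore(limit)
    in_flight = 0
    peak = 0

    async def fake_call() -> None:
        nonlocal in_flight, peak
        async with semaphore:
            in_flight += 1
            peak = max(peak, in_flight)
            await asyncio.sleep(latency_s)  # stand-in for the API request
            in_flight -= 1

    await asyncio.gather(*(fake_call() for _ in range(n_tasks)))
    return peak


if __name__ == "__main__":
    print("peak concurrency:", asyncio.run(run_limited(n_tasks=50, limit=5)))
```

All 50 tasks are scheduled immediately, but the semaphore lets only `limit` of them past the `async with` line at a time; the rest wait their turn without consuming API quota.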

💡 AbstractAPI’s infrastructure is designed for high concurrency, but responsible rate limiting ensures predictable latency and cost control.

👉 Related reading: https://www.abstractapi.com/guides/api-glossary/what-is-an-api-rate-limit

Conclusion: From Bottleneck to Powerhouse ⚡

Let’s recap what you’ve built:

🚫 Eliminated blocking I/O from Kafka consumers

🧬 Added schema validation with Pydantic

🌍 Integrated async IP intelligence with AbstractAPI

☠️ Implemented Dead Letter Queues for resilience

🚀 Raised the throughput ceiling from ~10 msg/sec to 1,000+ msg/sec per worker (for example, 100 concurrent calls at 100 ms each)

This architecture is well suited to:

- Payment authorization pipelines

- Fraud detection engines

- Real-time geofencing

- Account takeover prevention

Final takeaway

High-volume streams demand non-blocking design and reliable external APIs. By combining FastStream, asyncio, and AbstractAPI’s high-performance data validation services, you can build pipelines that scale confidently under real-world FinTech workloads.

👉 Explore more async-ready APIs at https://www.abstractapi.com and power your next streaming system with data you can trust. 💙